Implications of AiPin and Oura Connectivity

I originally posted this in the Humane Discord; what follows are lightly edited and expanded thoughts.

I've been seeing some commentary on the NYT piece about chatbots/AI making better diagnoses than doctors, and my mind went back to a previous conversation (in the Humane Discord) about the AiPin being able to connect to the Oura Ring and other fitness/wellness tech…

…ya know something, there's a medical information issue I didn't see before but see now.

Specifically (using Oura as example):

- person connects Oura ring to AiPin

- not only is the ring connected, but the entire Oura account is too

- person can query aspects similar to current Oura offerings (sleep times, recovery state, etc.)

A plausible use might look like:

{taps AiPin} “based on my sleep and activity data over the past year, what types of activities do you recommend for me to…”

Oura isn't a medical doctor/credentialed physician, nor is Humane. But if the synthesis of the devices and backends together creates conditions for recommendations that mirror diagnoses or prescriptions from credentialed (and insured) persons, what coverage do Oura/Humane have if one of those recommendations amounts to a misdiagnosis? Where is the line between "a good-faith recommendation based on a transformation of data" and "instructions which, if followed, have negative consequences directly attributable to the recommendation"?

Not a slippery slope either… this is more than someone taking data on their own and synthesizing a solution, or even taking descriptions from (for example) WebMD and then doing the thing. What safeguards can Humane put in front of such an integration that keep the benefit of the data transformation (it makes sense) while also keeping Humane from being held liable when (not "if") the synthesis offers something displeasing, illegal, or taken out of context by the Humane user?

This isn't a small question… but it highlights what ripples behind "can we ingest these things and offer guardrails against unforeseeable futures?"

Direct link to the paper/research

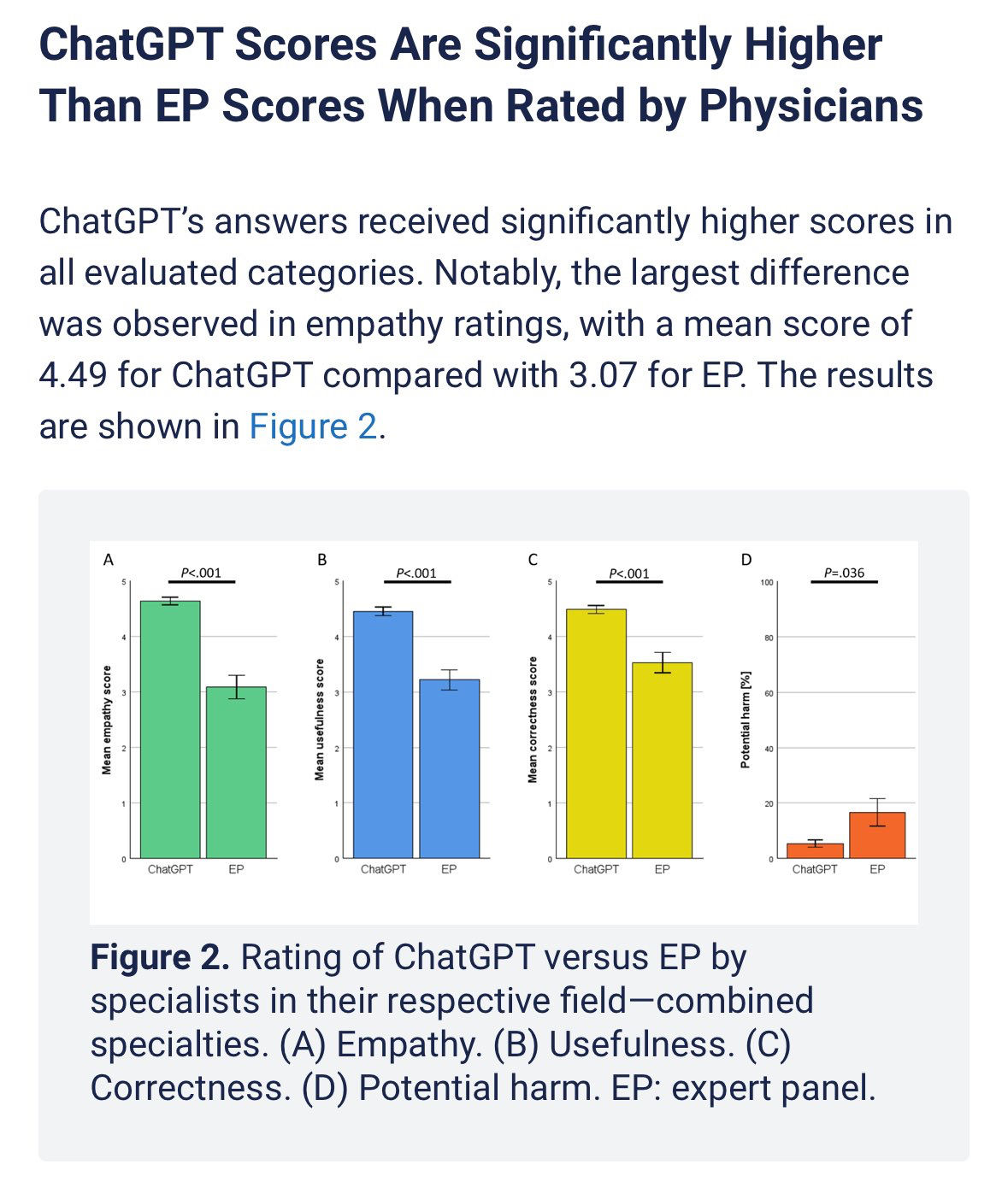

The results indicate that ChatGPT is capable of supporting patients in health-related queries better than physicians, at least in terms of written advice through a web-based platform. In this study, ChatGPT’s responses had a lower percentage of potentially harmful advice than the web-based EP. However, it is crucial to note that this finding is based on a specific study design and may not generalize to all health care settings. Alarmingly, patients are not able to independently recognize these potential dangers.

Another report/study published recently (pointed to in last week's Notable Reads) suggests that people with relevant expertise understand and apply LLM/GPT outputs better. Without that expertise on the user's side, integrators are going to have to account for liability in ways they might not have considered. I'm not sure Humane isn't already thinking about this in some way. Same for Oura, Whoop, and others. If there's some validity to GPTs being better at diagnosis, it makes sense for folks to use them. Liability is a consideration everyone should want to understand better before promoting such uses.